Category: technology

-

Convert Text to Audio on Linux – Coqui TTS

In a separate post on my blog, I documented a simple way to convert text to audio using Google’s Text-To-Speech service. This works for small projects. But during a recent conversion, Google started blocking my requests because I was using the service too much. I didn’t realize they capped…

-

Best 3D Printed Sunglasses Clip for Car Visor

I’ve needed a place to put sunglasses in my car for years. My sunglasses have typically ended up in one of the cup holders. Recently, it dawned on me that I could print a clip or holder that would attach to my sun visor to hold my sunglasses. I looked at the various 3D design…

-

Redacting PDFs on Linux

I had an odd situation pop up where I needed to redact a PDF. It wasn’t anything nefarious or super exciting, but it did involve a complicated PDF that included images and tables in addition to text. In figuring out how to redact the PDF, I ended up learning a few things and thought it…

-

Fixing Zotero Corrupt Database Issue on Linux

I have used Zotero to manage my references for decades, ever since it was first developed. I’m a huge fan and teach all my students how to use it. But I also don’t follow one of the programmers’ recommendations on where to store my Zotero database. As soon as I install Zotero, I change the…

-

Kubuntu 25.04 – Reset Wayland

I just installed Kubuntu 25.04 on my laptop and started running into an issue. It started when I tried to load Spectacle to take a screenshot. Spectacle froze and so did my panel, but I could still hit Alt+F2 to open new programs. I could also use Alt+Tab to switch between non-frozen programs. I couldn’t…

-

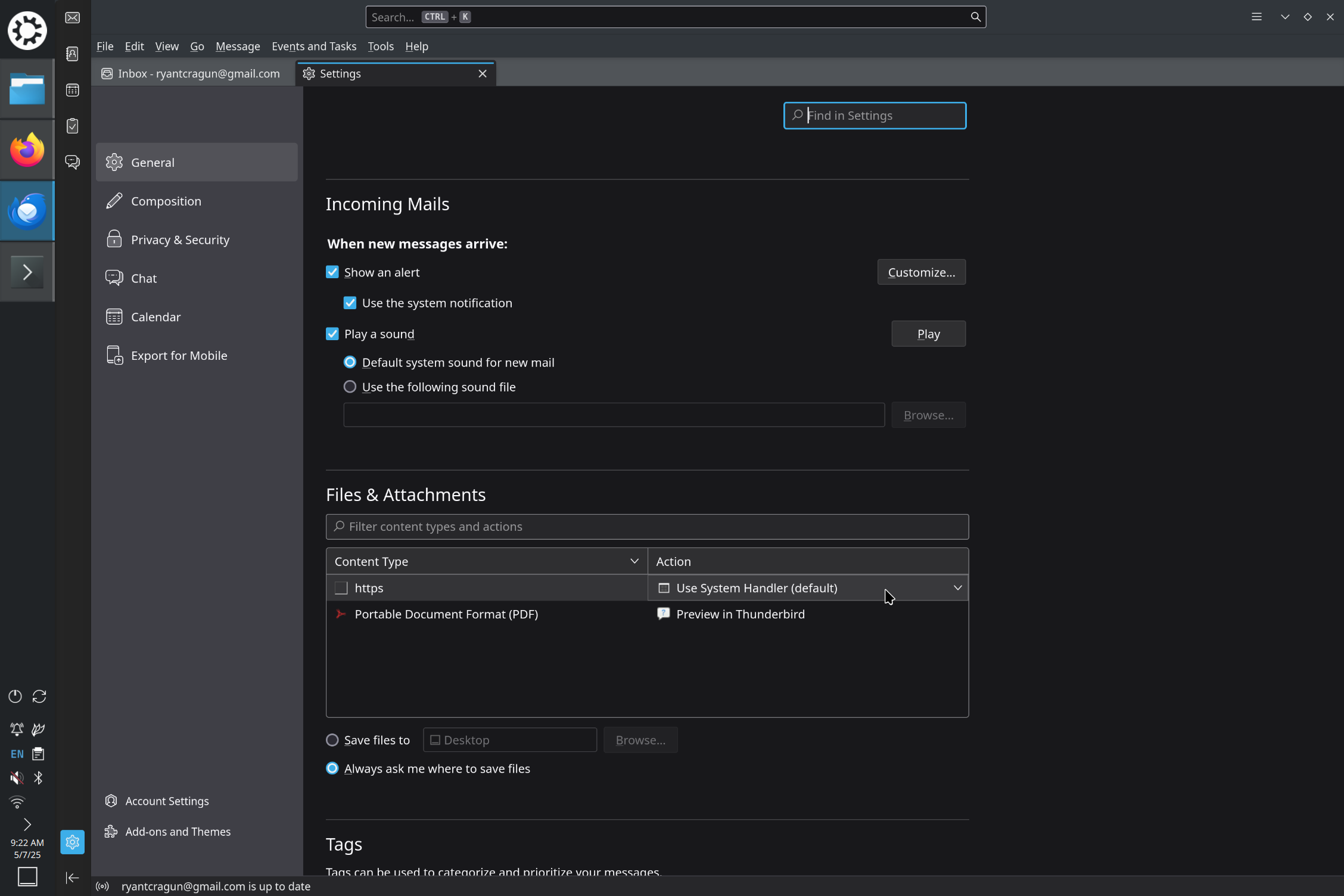

Changing Default Browser for Links in Thunderbird

I just reformatted my computer (I try to do that about once a year) and installed Kubuntu 25.04. That meant setting up Thunderbird (version 128.10.0esr) with my email again. I use Firefox as my default browser (because it respects privacy), but install Chromium as a backup for the occasional website that doesn’t work very well with…

-

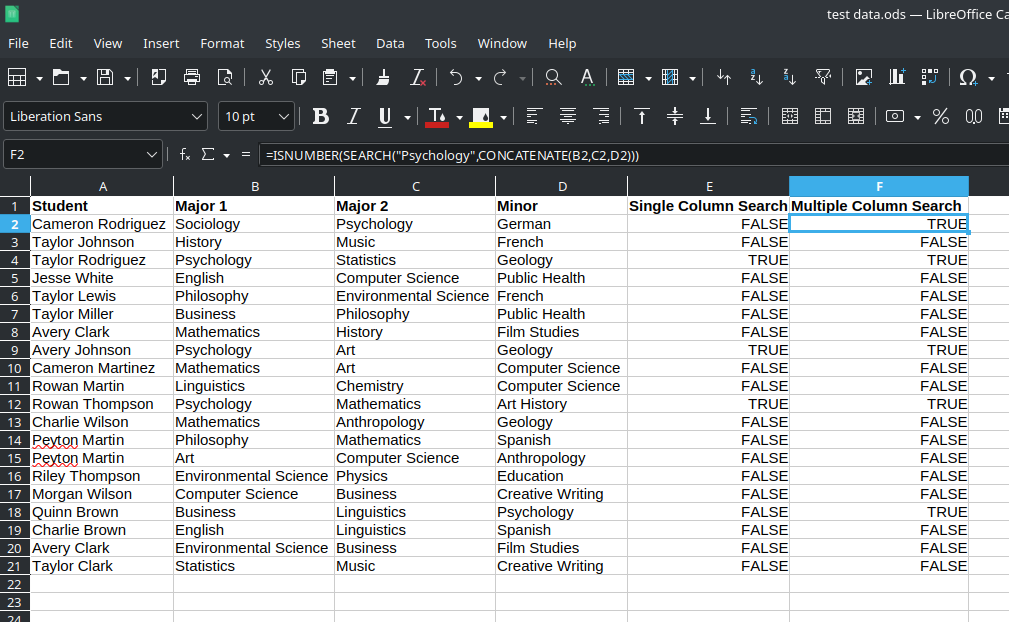

LibreOffice Calc – Search for Specific Text Across Columns

I have a task I am asked to do fairly regularly that involves searching through a large spreadsheet (around 4,000 rows with about a dozen columns) looking for very specific text. There are, of course, various ways I could accomplish this. But I finally found a solution that speeds this up and want to document…

-

Batch Convert MP4 to MKV

I ran into an issue where a number of the MP4 files on my file server stuttered when I played them back using Plex on my TV. After a lot of googling, I found out that this was due to some header information in the MP4 files and that the simple solution was…

-

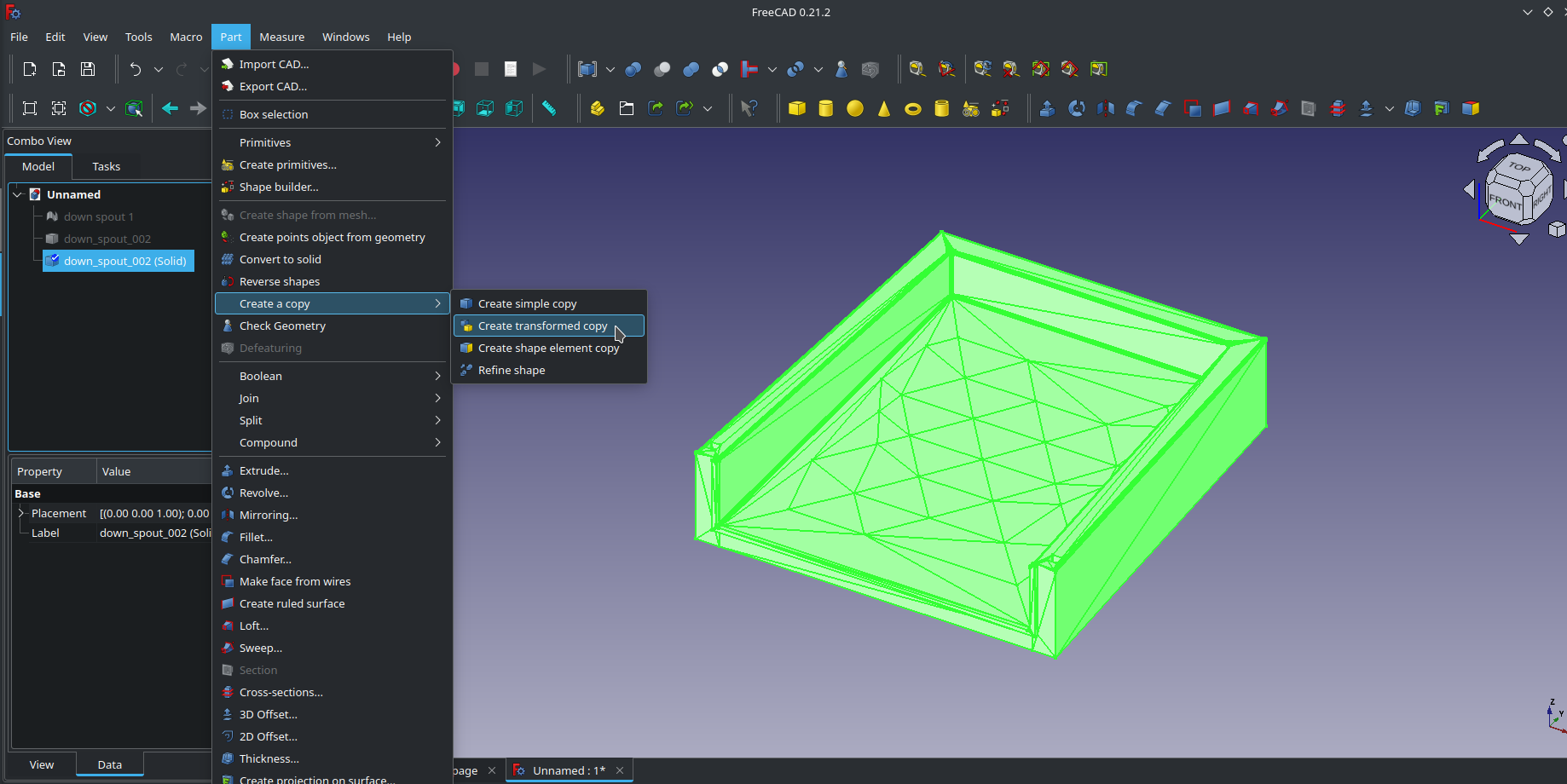

FreeCAD Import STL

I’m not a FreeCAD expert. I bought a 3D printer and am constantly coming up with new ideas for 3D prints to solve issues around my house and office. That required me to begin learning how to use CAD software and, as an open-source advocate, FreeCAD was the obvious choice for CAD. I design most…