Tag: Kubuntu

-

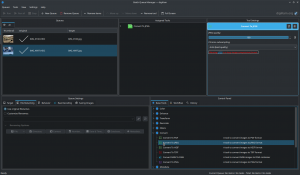

Getting Ollama and Open WebUI Working on Kubuntu 23.10

I have two specific use cases for setting up AI locally instead of using ChatGPT (for which I have a subscription as I use it for a variety of tasks, as does my wife). I needed to analyze interviews but couldn’t upload them to the web for security reasons. I also wanted to work on…

-

Kubuntu/Linux – batch convert HEIF images

My son recently went on a field trip. The teachers who went with him took hundreds of photos… on their iPhones. Since I want the photos of him, I downloaded all of them so I could skim through them and find the ones of my son. But I quickly realized that I was having issues…

-

Kubuntu 22.04 – Pending update of “firefox” snap

In the 22.04 version of Ubuntu/Kubuntu, Firefox was switched from a repository to a snap. I don’t know enough about the technical reasons for that, but it does mean that how Firefox is updated has changed. It also introduced a very annoying notification message that you’ve probably seen (and which is why you’re here: Switching…

-

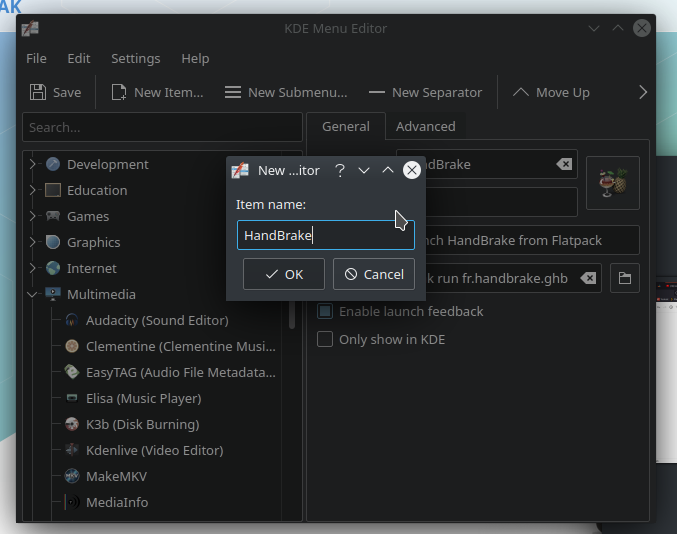

Launching HandBrake 1.4.0 in Kubuntu 21.04

The fine folks at HandBrake have updated their software and distribution system to a Flatpak approach. Their Flatpak for Linux is based on GNOME, not KDE, which is fine, but it does mean that the GUI is no longer skinned with my system settings on KDE. Oh well… The bigger issue is that, with a…

-

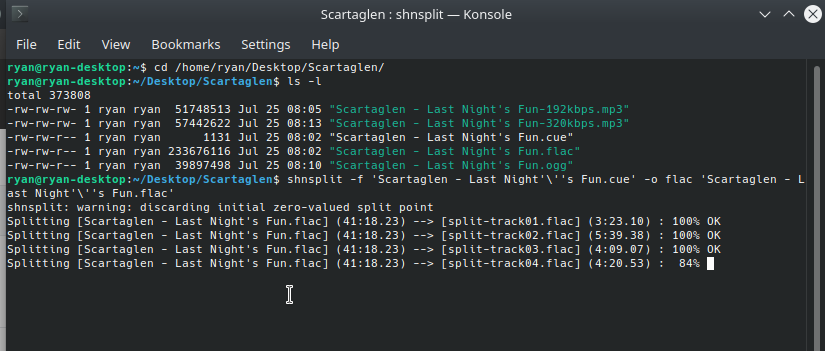

Kubuntu – Audio CD Ripping

I mostly buy digital audio these days. My preferred source is Bandcamp as they provide files in FLAC (Free Lossless Audio Codec). However, I ended up buying a CD recently (Last Night’s Fun by Scartaglen) as there wasn’t a digital download available and, in the process, I realized that there are lots of options for…

-

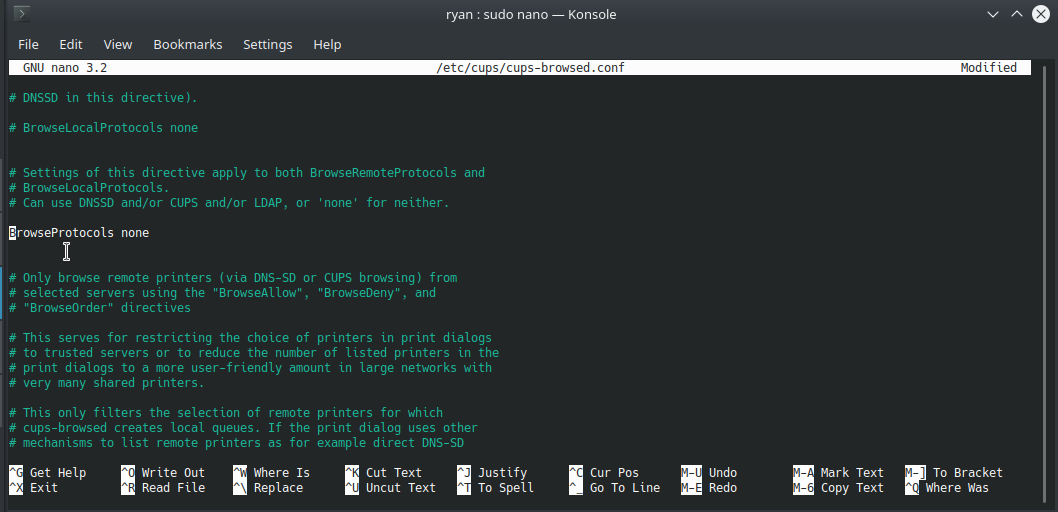

Linux/Kubuntu – Disable Network Printer Auto Discovery

I don’t know when Kubuntu started automatically discovering printers on networks and then adding them to my list of printers, but it is a problematic feature in certain environments – like universities (where I work). I set up my home printer on my laptop easily enough. But, whenever I open my laptop and connect to…

-

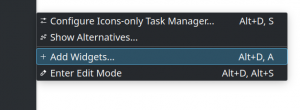

Restarting KDE’s Plasma Shell via Konsole (command line)

As much as I love KDE as my desktop environment (on top of Ubuntu, so Kubuntu), it does occasionally happen that the Plasma Shell freezes up (usually when I’ve been running my computer for quite a while then boot up a game and begin to push the graphics a bit). Often, I just shut down…

-

Building My Own NAS (with Plex, CrashPlan, NFS file sharing, BitTorrent, etc.)

NOTE: As of 2020-06-22, I have a new NAS build. You can read about it here. For about the last seven years (since 2012), I’ve been using a Synology NAS (Network Attached Storage) device in my house as a central repository for files, photos, music, and movies. It has generally worked well. However, there have…