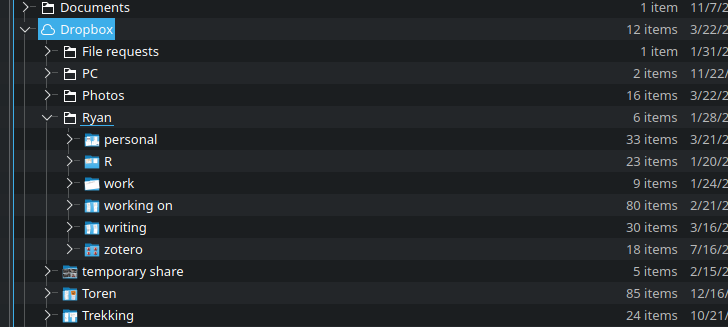

It took years to figure this out and it’s not free, but I don’t ever lose files anymore. Not losing files is worth the $240 I pay for these services…

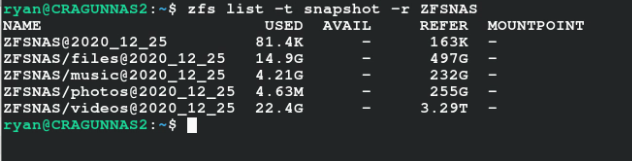

With my new file server/NAS, I am using a ZFS RAID array for my file storage. One aspect of the ZFS file system that I didn't really investigate all that closely…

After about a year and a half with my previous NAS (see here), I decided it was time for an upgrade. The previous NAS had served dutifully, but it was…

NOTE: As of 2020-06-22, I have a new NAS build. You can read about it here. For about the last seven years (since 2012), I’ve been using a Synology NAS…

If you're using a Synology NAS box and would like to back up your files to the offsite storage service CrashPlan (which is fairly inexpensive), there is a relatively easy way to…

UPDATE: As of Ubuntu 13.04, these directions no longer work. However, I figured out a way to get it working. See the updated directions at the bottom of this…